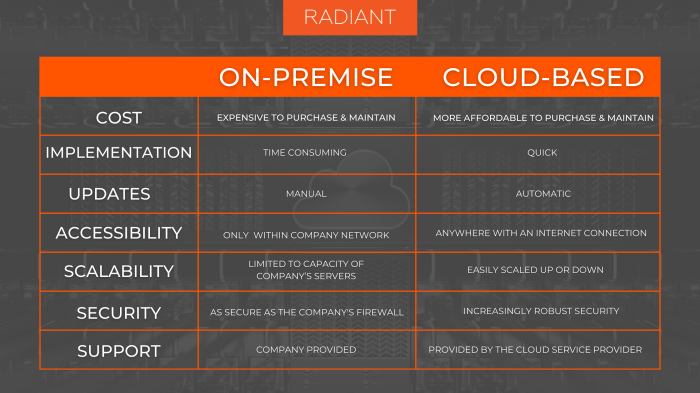

Choosing between cloud-based and on-premise business intelligence solutions is a crucial decision for any organization. The right answer hinges on a complex interplay of factors: cost, security, scalability, and integration capabilities. This deep dive explores the key differences, helping you navigate the decision-making process and select the BI solution that best aligns with your business needs and budget.

From initial investment costs and ongoing maintenance to data security protocols and scalability options, we’ll dissect the advantages and disadvantages of each approach. We’ll also examine the crucial aspects of data integration, vendor lock-in, and the level of technical expertise required for successful implementation. Ultimately, understanding these nuances will empower you to make an informed choice that drives your business forward.

Cost Comparison

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions often boils down to budget. Understanding the cost implications of each is crucial for making an informed decision that aligns with your company’s financial resources and growth trajectory. Let’s break down the financial aspects to help you navigate this critical choice.

Initial Investment Costs

The initial outlay for cloud-based and on-premise BI solutions differs significantly. Cloud solutions generally require less upfront investment, while on-premise deployments involve substantial initial costs for hardware and software. The following table illustrates a typical comparison:

| Feature | Cloud-Based BI | On-Premise BI |
|---|---|---|
| Setup Fees | Relatively low; often included in the subscription. | Can be substantial, covering hardware procurement, installation, and network configuration. |
| Software Licenses | Subscription-based, typically monthly or annual fees. | One-time purchase, but with potential for expensive upgrades and maintenance contracts. |
| Hardware Requirements | Minimal; users need only a computer and an internet connection. | Significant; requires servers, storage devices, network infrastructure, and potentially dedicated IT personnel. Example: a mid-sized company might need a server costing $10,000, storage solutions around $5,000, and networking equipment for another $3,000. |

Ongoing Operational Costs

Beyond the initial investment, ongoing operational costs are a key factor to consider. These costs can vary significantly depending on the chosen solution and the scale of your BI operations.

Here’s a breakdown of typical ongoing expenses:

- Cloud-Based BI: Subscription fees (often scalable based on usage), potential additional charges for increased storage or data processing, and support costs.

- On-Premise BI: IT staff salaries for maintenance and support, hardware maintenance and repairs, software updates and upgrades, electricity costs for servers, and potential costs for security and backup solutions. For instance, maintaining a server room can cost several thousand dollars annually in electricity alone.
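To see how initial and ongoing costs combine, here is a minimal total-cost-of-ownership sketch. Apart from the $10,000 server, $5,000 storage, and $3,000 networking figures from the hardware example, every number below (setup fee, subscription, licenses, staff share, maintenance, electricity) is a hypothetical placeholder; substitute your own quotes before drawing conclusions.

```python
# Minimal total-cost-of-ownership (TCO) sketch.
# All figures except the hardware example are hypothetical placeholders.

def total_cost(initial: float, annual: float, years: int) -> float:
    """Upfront cost plus a flat recurring cost for each year."""
    return initial + annual * years

# Cloud: hypothetical setup fee and annual subscription plus support.
cloud_initial = 2_000
cloud_annual = 24_000

# On-premise: hardware from the example ($10k server, $5k storage, $3k networking)
# plus hypothetical licenses and recurring staff, maintenance, and electricity costs.
hardware = 10_000 + 5_000 + 3_000
onprem_initial = hardware + 30_000        # hypothetical perpetual licenses
onprem_annual = 15_000 + 3_000 + 2_500    # hypothetical staff share + maintenance + power

for years in (1, 3, 5):
    cloud = total_cost(cloud_initial, cloud_annual, years)
    onprem = total_cost(onprem_initial, onprem_annual, years)
    print(f"{years} yr: cloud ${cloud:,.0f} vs on-premise ${onprem:,.0f}")
```

Because on-premise spending is front-loaded while cloud spending is spread over time, the horizon you evaluate can change which option looks cheaper.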

Scalability of Costs

As your data volume grows and user needs increase, the cost implications of each solution diverge.

Cloud-based BI offers a more incremental cost structure that scales with use. As your data expands, you can typically move to a higher subscription tier or pay for additional capacity, so spending tracks the resources you consume. This allows for greater flexibility and avoids the large capital expenditures associated with scaling on-premise infrastructure, although usage-based charges still need monitoring to stay within budget. Scaling an on-premise BI solution, on the other hand, can be significantly more expensive: it may involve purchasing additional hardware, upgrading existing infrastructure, and hiring more IT staff to manage the expanded system. These steps often require significant upfront investment and can cause disruptions during upgrades.
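The difference in shape between the two cost curves can be sketched as follows, assuming hypothetical prices: cloud storage billed per terabyte per year, and on-premise capacity bought in whole server bundles of fixed size. The output compares the shape of each curve (proportional versus stepped), not like-for-like totals, since one is a recurring charge and the other an upfront purchase.

```python
import math

# Hypothetical prices, chosen only to illustrate the shape of each curve.
CLOUD_PRICE_PER_TB_YEAR = 1_200   # pay-as-you-go: cost grows with usage
SERVER_COST = 18_000              # one on-premise server/storage/network bundle
SERVER_CAPACITY_TB = 10           # hypothetical capacity of one bundle

def cloud_cost(tb: float) -> float:
    """Cloud cost rises roughly in proportion to consumption."""
    return tb * CLOUD_PRICE_PER_TB_YEAR

def onprem_capex(tb: float) -> float:
    """On-premise capacity is bought in whole units, so cost rises in steps."""
    servers = math.ceil(tb / SERVER_CAPACITY_TB)
    return servers * SERVER_COST

for tb in (5, 10, 11, 25):
    print(f"{tb:>3} TB: cloud ${cloud_cost(tb):,.0f}/yr, "
          f"on-premise ${onprem_capex(tb):,.0f} upfront")
```

Note the jump between 10 TB and 11 TB on the on-premise side: crossing a capacity boundary by a small amount forces the purchase of an entire additional unit.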

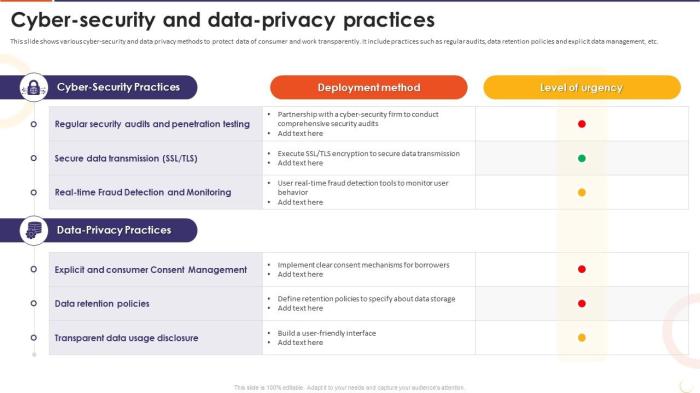

Data Security and Compliance

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions often hinges on critical considerations beyond just cost. Data security and compliance are paramount, demanding careful evaluation of each approach's strengths and weaknesses. This section delves into the security measures and potential vulnerabilities inherent in both cloud and on-premise BI deployments.

Cloud-based BI solutions typically boast robust security features designed to protect sensitive data. These measures are often managed by the cloud provider, reducing the burden on the organization itself. However, understanding the specifics of these security measures and the potential limitations is vital for informed decision-making.

Cloud-Based BI Security Measures

Cloud providers invest heavily in security infrastructure. Encrypting data both in transit and at rest is standard practice: data is encrypted while it travels over the network and stored in encrypted form, so anyone who intercepts it or obtains the underlying storage cannot read it without the encryption keys. Access control mechanisms, such as role-based access control (RBAC), allow administrators to manage user permissions at a granular level, ensuring only authorized personnel can access specific data sets.
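Role-based access control can be pictured as a mapping from roles to permitted data sets, checked on every request. The sketch below is a generic model with hypothetical role and data set names; real cloud BI platforms expose RBAC through their own admin consoles and identity services rather than through code like this.

```python
from dataclasses import dataclass, field

# Hypothetical roles mapped to the data sets each one may read.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"sales", "marketing"},
    "finance": {"sales", "payroll"},
    "admin": {"sales", "marketing", "payroll"},
}

@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)

def can_read(user: User, dataset: str) -> bool:
    """Grant access only if at least one of the user's roles includes the data set."""
    return any(dataset in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)

alice = User("alice", {"analyst"})
print(can_read(alice, "marketing"))  # True
print(can_read(alice, "payroll"))    # False
```

Users with no roles, or with roles missing from the mapping, are denied by default, which mirrors the least-privilege principle behind RBAC.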

Many cloud providers also hold independent attestations such as ISO 27001 certification and SOC 2 reports, and many support HIPAA compliance, typically by signing a Business Associate Agreement (BAA), since HIPAA itself has no official certification. These attestations indicate that the provider has met specific security and privacy standards, providing an extra layer of assurance to clients. For example, a healthcare organization choosing a cloud BI solution would likely prioritize a provider willing to sign a HIPAA BAA.

On-Premise BI Security Measures

On-premise BI solutions require organizations to manage their security infrastructure directly. This involves implementing physical security measures, such as secure data centers with access controls and surveillance, to prevent unauthorized physical access to servers and hardware. Robust network security protocols, including firewalls, intrusion detection systems, and regular security audits, are essential to protect against cyber threats. Data backup and disaster recovery strategies are crucial, ensuring business continuity in case of hardware failure or data loss.

Regular data backups, stored both on-site and off-site, are a best practice. Consider a scenario where a company experiences a server failure. With a comprehensive backup strategy, data recovery can be swift, minimizing disruption to operations.
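A backup strategy is only useful if the copies are intact. The following sketch copies a file to an on-site and an off-site destination and verifies each copy with a SHA-256 checksum. The file name and the two temporary directories are hypothetical stand-ins; in practice the off-site target would be a separate physical location or storage service, and the job would run on a schedule.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def back_up(source: Path, destinations: list[Path]) -> dict[Path, bool]:
    """Copy source into each destination directory; report whether each copy verifies."""
    expected = sha256(source)
    results: dict[Path, bool] = {}
    for dest_dir in destinations:
        dest_dir.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, dest_dir / source.name))
        results[dest_dir] = sha256(copy) == expected
    return results

# Usage with temporary directories standing in for on-site and off-site storage.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    warehouse = root / "bi_warehouse.db"  # hypothetical file name
    warehouse.write_bytes(b"example warehouse contents")
    status = back_up(warehouse, [root / "onsite", root / "offsite"])
    print(all(status.values()))  # True
```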

Potential Security Risks and Vulnerabilities

Understanding the potential risks associated with each approach, including the operational trade-offs that shape its security posture, is crucial for mitigating vulnerabilities.

Cloud-Based BI Risks:

- Vendor lock-in: Migrating data away from a cloud provider can be complex and costly.

- Data breaches at the provider level: While providers invest heavily in security, they are still susceptible to breaches, which can affect many of their clients at once.

- Compliance concerns: Ensuring the provider meets all necessary compliance standards for your industry is critical.

On-Premise BI Risks:

- Higher initial investment costs: Setting up and maintaining on-premise infrastructure is expensive.

- Increased maintenance burden: Organizations are responsible for all aspects of security and maintenance.

- Limited scalability: Expanding capacity requires significant upfront investment.

- Physical security vulnerabilities: On-site data centers are vulnerable to physical breaches, natural disasters, and power outages.

Deployment and Implementation

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions significantly impacts deployment and implementation timelines and required technical expertise. Understanding these differences is crucial for aligning your BI strategy with your business needs and resources. This section breaks down the key differences to help you make an informed decision.

The deployment and implementation phases for both cloud and on-premise BI solutions differ significantly in terms of time commitment, technical skill requirements, and integration complexity. While cloud solutions generally offer faster deployment, on-premise solutions provide greater control but require more extensive upfront investment.

Deployment Timelines

The time required to deploy and implement a BI solution varies greatly depending on factors like data volume, system complexity, and the chosen vendor. However, general estimations can be made to illustrate the differences between cloud and on-premise approaches.

| Deployment Type | Estimated Timeline |
|---|---|
| Cloud-Based BI | 2-8 weeks, depending on data migration complexity and customization needs. A simple implementation with pre-built dashboards might take as little as 2 weeks, while a complex integration with multiple data sources could take up to 8 weeks. |
| On-Premise BI | 8-24 weeks, accounting for hardware procurement, software installation, data migration, configuration, and extensive testing. Complex deployments can extend beyond 24 weeks. |

Technical Expertise Required

The technical expertise needed for successful implementation differs considerably between cloud and on-premise BI solutions. Cloud solutions often require less specialized technical skills, while on-premise deployments demand a more comprehensive skillset.

Cloud-based BI typically requires personnel with expertise in data integration, cloud platforms (like AWS, Azure, or GCP), and the specific BI tool being used. A strong understanding of data modeling and basic SQL skills are beneficial. On the other hand, on-premise solutions demand a more extensive IT team with expertise in server administration, database management (SQL Server, Oracle, etc.), network security, and the specific BI software. This team must also handle tasks like system maintenance, backups, and upgrades.

Integration with Existing Enterprise Systems

Integrating your BI solution with existing enterprise systems is a critical step for maximizing its value. The integration process differs depending on whether you choose a cloud or on-premise solution.


Cloud-Based BI Integration:

- Data Source Identification: Identify all relevant data sources within your enterprise systems (CRM, ERP, databases, etc.).

- API Connectivity: Leverage APIs provided by your cloud BI platform and existing systems to establish data connections. Many cloud BI tools offer pre-built connectors for common enterprise applications.

- Data Transformation: Transform and cleanse data to ensure consistency and accuracy. Cloud-based ETL (Extract, Transform, Load) tools can automate this process.

- Security Configuration: Secure data connections and access controls according to your enterprise security policies.

- Testing and Validation: Thoroughly test the integration to ensure data accuracy and reliability.
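The extract, transform, and load steps that cloud ETL tools automate can be sketched in a few lines of Python. This example assumes a hypothetical CRM export in CSV form and uses an in-memory SQLite database as a stand-in for the BI platform's storage. The transform step normalizes formatting, fills a missing value, and removes a duplicate row; a final row count serves as a simple validation check.

```python
import csv
import io
import sqlite3

# Hypothetical CRM export; in practice this would arrive via a connector or API.
RAW_CSV = """customer_id,email,region,revenue
101, Alice@Example.com ,north,1200.50
102,bob@example.com,South,
101,alice@example.com,North,1200.50
103,carol@example.com,east,980
"""

def extract(text: str) -> list[dict[str, str]]:
    """Parse CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict[str, str]]) -> list[tuple]:
    """Normalize case and whitespace, default missing revenue to 0, drop duplicates."""
    seen, clean = set(), []
    for row in rows:
        record = (
            int(row["customer_id"]),
            row["email"].strip().lower(),
            row["region"].strip().title(),
            float(row["revenue"] or 0),
        )
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean

def load(records: list[tuple], conn: sqlite3.Connection) -> None:
    """Write cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers "
                 "(customer_id INTEGER, email TEXT, region TEXT, revenue REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
# Validation: four raw rows should collapse to three after de-duplication.
count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(count)  # 3
```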

On-Premise BI Integration:

- Data Source Assessment: Conduct a comprehensive assessment of all data sources to understand their structure and accessibility.

- Database Connectivity: Establish secure database connections between your on-premise BI server and your existing enterprise databases.

- ETL Process Development: Develop and implement a robust ETL process to extract, transform, and load data into your on-premise BI data warehouse.

- Data Modeling and Design: Design a data warehouse schema that optimizes data access and performance.

- Security Implementation: Implement robust security measures to protect sensitive data, including network security, access controls, and data encryption.

- Testing and Validation: Rigorous testing is crucial to ensure data accuracy and system stability.
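One common way to design the warehouse schema mentioned in the data modeling step is a star schema: a central fact table of measures linked to dimension tables of descriptive attributes. The sketch below uses SQLite as a stand-in for a database such as SQL Server or Oracle, with hypothetical tables and sample rows; it illustrates one design option rather than a required one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

-- The fact table holds measures plus foreign keys to each dimension.
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    amount REAL
);

-- Hypothetical sample data.
INSERT INTO dim_customer VALUES (1, 'Acme', 'North'), (2, 'Globex', 'South');
INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
INSERT INTO fact_sales VALUES (1, 20240101, 500), (1, 20240201, 250), (2, 20240101, 300);
""")

# Typical BI query: aggregate the fact table, sliced by dimension attributes.
query = """
SELECT c.region, d.month, SUM(f.amount) AS total
FROM fact_sales f
JOIN dim_customer c ON c.customer_key = f.customer_key
JOIN dim_date d ON d.date_key = f.date_key
GROUP BY c.region, d.month
ORDER BY c.region, d.month
"""
for row in conn.execute(query):
    print(row)
```

Keeping measures in one narrow fact table and attributes in small dimension tables tends to keep aggregate queries like this one simple to write and efficient to run.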

Scalability and Flexibility

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions often hinges on a company's growth trajectory and adaptability needs. Both offer unique strengths, but their approaches to scalability and flexibility differ significantly, impacting a business's ability to respond to evolving data volumes and changing market demands.

Cloud-based BI solutions generally exhibit superior scalability compared to their on-premise counterparts. This stems from the inherent nature of cloud infrastructure, which allows for seamless resource allocation based on real-time needs. On-premise systems, on the other hand, require significant upfront investment in hardware and infrastructure, limiting their capacity for rapid expansion.

Scalability of Cloud and On-Premise BI

Cloud BI solutions excel at handling increasing data volumes and user demands. As data grows, cloud platforms can scale computing power, storage, and bandwidth to accommodate the increased load, often automatically when autoscaling is enabled. This reduces the need for manual intervention and helps minimize downtime. Imagine a rapidly growing e-commerce company experiencing a sudden surge in sales during the holiday season. A cloud-based BI system with appropriate scaling policies could absorb the influx of data and user requests, continuing to deliver timely insights with little or no performance degradation.

In contrast, an on-premise system might struggle to cope with such a sudden increase, potentially leading to slowdowns, crashes, or even complete system failure. Upgrading an on-premise system to handle this growth would involve significant time, expense, and disruption. This highlights the agility and cost-effectiveness of cloud scalability.
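Elastic scaling is usually driven by a rule that compares observed load with a target. As an illustration, the sketch below applies the proportional rule documented for Kubernetes' Horizontal Pod Autoscaler (desired = ceil(current * current utilization / target utilization)), clamped to a minimum and maximum. Cloud BI providers use their own scaling policies, which may differ; the instance counts and utilization figures here are hypothetical.

```python
import math

def desired_instances(current: int, current_util_pct: float, target_util_pct: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Proportional rule: resize the pool so utilization moves toward the target."""
    desired = math.ceil(current * current_util_pct / target_util_pct)
    return max(min_instances, min(max_instances, desired))

# Holiday surge: 4 instances at 90% CPU against a 60% target -> scale out.
print(desired_instances(4, 90, 60))  # 6
# Quiet period: 4 instances at 15% CPU -> scale in to the minimum.
print(desired_instances(4, 15, 60))  # 1
```

The upper bound acts as a cost ceiling: even during an extreme spike, the pool never grows past `max_instances`.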

Flexibility in Customization and Integration

Cloud-based BI platforms often provide a wide array of pre-built connectors and APIs, facilitating seamless integration with other business applications. This interoperability streamlines data flow and enhances the overall efficiency of the BI system. For example, a company using Salesforce for CRM and Google Analytics for web traffic could easily integrate both data sources into a cloud-based BI dashboard for a holistic view of customer behavior and marketing campaign performance.
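Pre-built connectors typically work by giving every source the same interface so the platform can merge their output into one view. The sketch below models that idea with a hypothetical `Connector` protocol and two in-memory stand-ins for a CRM and a web analytics source; it does not use the actual Salesforce or Google Analytics APIs.

```python
from typing import Iterable, Protocol

class Connector(Protocol):
    """Common interface every source adapter implements (hypothetical)."""
    name: str
    def fetch(self) -> Iterable[dict]: ...

class CrmConnector:
    name = "crm"
    def fetch(self) -> Iterable[dict]:
        # Stand-in for a CRM API call returning customer records.
        return [{"customer": "acme", "stage": "won"},
                {"customer": "globex", "stage": "open"}]

class WebAnalyticsConnector:
    name = "web"
    def fetch(self) -> Iterable[dict]:
        # Stand-in for a web analytics export returning sessions per customer.
        return [{"customer": "acme", "sessions": 42},
                {"customer": "globex", "sessions": 7}]

def build_view(connectors: list[Connector]) -> dict[str, dict]:
    """Merge records from every connector into one row per customer."""
    view: dict[str, dict] = {}
    for connector in connectors:
        for record in connector.fetch():
            view.setdefault(record["customer"], {}).update(record)
    return view

view = build_view([CrmConnector(), WebAnalyticsConnector()])
print(view["acme"])  # {'customer': 'acme', 'stage': 'won', 'sessions': 42}
```

Adding a new source then means writing one more class with a `fetch` method, without changing `build_view`.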

While on-premise solutions can also be integrated with other systems, the process is typically more complex and time-consuming, requiring significant customization and potentially specialized IT expertise.

Customization options vary between cloud and on-premise solutions. Cloud platforms generally offer a balance between pre-built functionalities and customization options through scripting or extensions. On-premise solutions, while offering greater control over customization, often demand more extensive development efforts and specialized skills to tailor the system precisely to unique business requirements.

Responding to Rapidly Scaling BI Needs

Consider a scenario where a startup experiences explosive growth, requiring a significant increase in its BI capabilities within a short timeframe. A cloud-based BI solution would likely be the better fit. The provider can quickly scale resources to meet the increased demand, helping maintain uninterrupted access to data and insights. The startup could add more users, increase data storage, and enhance processing power without lengthy hardware procurement or complex infrastructure upgrades.

Conversely, an on-premise system would necessitate a substantial investment in new hardware, software licenses, and potentially additional IT staff, delaying the expansion of BI capabilities and potentially hindering the company’s rapid growth. The cloud’s elasticity allows for rapid adaptation, offering a competitive advantage in dynamic market conditions.

Maintenance and Support

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions involves careful consideration of ongoing maintenance and support needs. The responsibility for upkeep, updates, and troubleshooting differs significantly between these two models, affecting both cost and operational efficiency. Understanding these differences is crucial for making an informed decision that aligns with your organization's resources and priorities.

Cloud solutions generally offer a more hands-off approach, while on-premise deployments demand dedicated IT resources. This difference extends to software updates, hardware maintenance, and security patching, and it shapes both operational costs and IT team workloads.

Maintenance Responsibilities

The following table summarizes the key maintenance responsibilities for each BI solution type:

| Task | Cloud-Based BI | On-Premise BI |
|---|---|---|
| Software Updates | Vendor-managed and typically deployed automatically. | IT team responsible for scheduling, downloading, testing, and deploying updates. |
| Hardware Maintenance | Vendor-managed; no on-site hardware to maintain. | IT team responsible for server maintenance, backups, and potential hardware replacements. |
| Security Patches | Vendor-managed and generally applied automatically. Regular security audits may be conducted by the vendor. | IT team responsible for applying patches, monitoring security logs, and implementing security measures. |
| Database Administration | Typically managed by the vendor, although some configurations may require internal expertise. | Internal DBA team responsible for database performance tuning, backups, recovery, and security. |
| Data Backup and Recovery | Vendor handles backups and disaster recovery, often with service level agreements (SLAs) guaranteeing recovery time objectives (RTOs) and recovery point objectives (RPOs). | IT team responsible for implementing and managing backup and recovery strategies, including offsite storage. |
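Recovery objectives can be checked against a backup plan with simple arithmetic. The recovery point objective (RPO) is the maximum acceptable data loss measured in time, and the recovery time objective (RTO) is the maximum acceptable time to restore service. This sketch assumes, as a simplification, that worst-case data loss equals one backup interval and that downtime equals restore time (ignoring detection and failover delays); the plan and targets are hypothetical.

```python
from datetime import timedelta

def meets_objectives(backup_interval: timedelta, restore_time: timedelta,
                     rpo: timedelta, rto: timedelta) -> dict[str, bool]:
    """Check a backup plan against recovery objectives.

    Worst-case data loss is approximated by one backup interval (failure just
    before the next backup runs); downtime is approximated by restore time.
    """
    return {
        "rpo_met": backup_interval <= rpo,
        "rto_met": restore_time <= rto,
    }

# Hypothetical plan: nightly backups and a 6-hour restore,
# measured against a 4-hour RPO and an 8-hour RTO.
result = meets_objectives(timedelta(hours=24), timedelta(hours=6),
                          rpo=timedelta(hours=4), rto=timedelta(hours=8))
print(result)  # {'rpo_met': False, 'rto_met': True}
```

In this hypothetical plan, meeting the RPO would require backing up (or replicating) at least every 4 hours.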

Vendor Support Levels

Cloud-based BI vendors typically offer comprehensive support packages with varying levels of service. These often include 24/7 support, defined response times (e.g., within 4 hours for critical issues), and SLAs guaranteeing uptime and resolution times. On-premise BI solutions usually rely on vendor support contracts, but the level of support and response times can vary greatly depending on the contract and the vendor's resources. Smaller vendors might offer limited support hours or longer response times compared to larger, established companies.
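Uptime percentages in an SLA translate directly into permitted downtime. For example, 99.9% uptime over a 365-day year (8,760 hours) allows about 8.76 hours of downtime. The sketch below computes this for a few common tiers; many SLAs measure availability per month rather than per year, which the `period_hours` parameter accommodates.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760; ignores leap years

def allowed_downtime_hours(uptime_pct: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime an uptime percentage permits over the given period."""
    return period_hours * (1 - uptime_pct / 100)

for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% uptime -> {allowed_downtime_hours(sla):.2f} hours/year")
```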

Common Maintenance Issues and Resolution

Software bugs and performance bottlenecks are common issues in both environments. In cloud-based solutions, these are often addressed by the vendor through updates or hotfixes, with notifications and minimal disruption to users. On-premise solutions require internal IT teams to diagnose and resolve such problems, potentially involving troubleshooting, patching, or even contacting the vendor for support.

Another frequent challenge is data corruption. Cloud providers usually have robust data backup and recovery mechanisms, minimizing data loss. On-premise solutions require meticulous backup procedures and a well-defined disaster recovery plan to mitigate data loss risks. For example, a large retailer using an on-premise BI system experienced a server failure leading to a temporary outage. Their IT team, following their disaster recovery plan, restored the system from backups within 6 hours, minimizing business disruption. However, a smaller company might not have the resources for such robust recovery, leading to longer downtime.

Vendor Lock-in

Choosing a business intelligence (BI) solution, whether cloud-based or on-premise, is a significant decision with long-term implications. One crucial factor often overlooked is the potential for vendor lock-in: the difficulty and cost associated with switching providers once a system is in place. Understanding the risks and mitigation strategies for both cloud and on-premise solutions is vital for ensuring future flexibility and cost-effectiveness.

Vendor lock-in can manifest in various ways, from proprietary data formats and integrations to complex contract terms and the sheer effort required to migrate data and functionality to a new platform. This can severely restrict your options, limiting your ability to negotiate better pricing, access innovative features, or adapt to changing business needs. The implications for both future flexibility and long-term costs can be substantial, potentially hindering growth and competitiveness.

Vendor Lock-in in Cloud-Based BI Solutions

Cloud-based BI solutions, while offering many advantages, can also lead to vendor lock-in. The reliance on a single provider's infrastructure, software, and services creates a dependency that can be difficult to break. For example, a company deeply integrated with Salesforce's BI tools might find it extremely challenging and expensive to migrate to a different platform. This is especially true if data is stored in a proprietary format or if custom integrations have been developed.

Mitigation strategies for cloud-based BI solutions are essential to avoid being trapped by a vendor. The following approaches can significantly reduce the risk:

- Choose open standards: Opt for solutions that utilize open data formats (like CSV or JSON) and support open APIs. This allows for easier data export and integration with other systems.

- Regularly assess the market: Stay informed about alternative BI providers and their offerings. Periodic reviews will help identify potential replacements and assess the feasibility of switching.

- Negotiate flexible contracts: Avoid long-term contracts with strict exit clauses. Negotiate terms that allow for easier termination or migration with reasonable notice periods.

- Data portability planning: Develop a data migration strategy upfront. This involves regularly backing up data to a neutral format and testing the portability of your data to alternative platforms.
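Data portability planning can be tested in practice by exporting tables to neutral formats and confirming they reload intact. This sketch assumes an in-memory SQLite database with a hypothetical `sales` table; the same export-and-reload check applies to whatever export mechanism your BI platform provides.

```python
import csv
import json
import sqlite3
import tempfile
from pathlib import Path

def export_table(conn: sqlite3.Connection, table: str, out_dir: Path) -> None:
    """Write one table to CSV and JSON so another tool can re-import it."""
    # The table name is interpolated directly, so it must come from a trusted list.
    cursor = conn.execute(f"SELECT * FROM {table}")
    columns = [col[0] for col in cursor.description]
    rows = cursor.fetchall()
    out_dir.mkdir(parents=True, exist_ok=True)
    with (out_dir / f"{table}.csv").open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(columns)
        writer.writerows(rows)
    records = [dict(zip(columns, row)) for row in rows]
    (out_dir / f"{table}.json").write_text(json.dumps(records, indent=2))

# Usage: export a hypothetical table, then reload both copies to test portability.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("North", 500.0), ("South", 300.0)])
with tempfile.TemporaryDirectory() as tmp:
    export_table(conn, "sales", Path(tmp))
    reloaded = json.loads((Path(tmp) / "sales.json").read_text())
    with (Path(tmp) / "sales.csv").open(newline="") as f:
        csv_rows = list(csv.DictReader(f))
print(len(reloaded), len(csv_rows))  # 2 2
```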

Vendor Lock-in in On-Premise BI Solutions

While on-premise solutions offer greater control, they also carry the risk of vendor lock-in, albeit in different ways. This can stem from the reliance on a specific vendor's hardware, software licenses, and specialized expertise for maintenance and support. Migrating to a new system might involve significant upfront costs for new hardware, software licenses, and extensive re-training of staff. Furthermore, the integration of on-premise BI systems with other internal systems can create dependencies that are difficult to disentangle.

Mitigating vendor lock-in for on-premise BI solutions requires a proactive approach focusing on flexibility and future-proofing. Consider these strategies:

- Open-source components: Incorporate open-source software components where possible. This reduces reliance on a single vendor for specific functionalities.

- Modular architecture: Design your system with a modular architecture, allowing for the gradual replacement of individual components without impacting the entire system.

- Standard hardware: Use industry-standard hardware rather than proprietary equipment from a specific vendor. This increases flexibility in choosing future hardware providers.

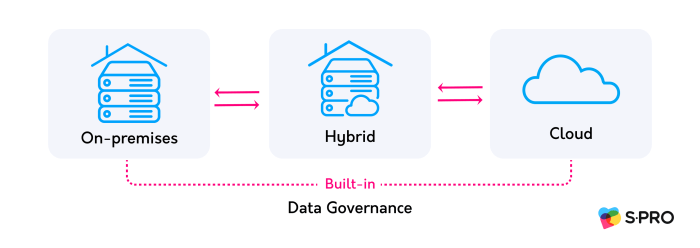

- Data standardization: Maintain data in widely accepted formats and develop a robust data governance framework to ensure data consistency and portability.

Data Integration Capabilities

Choosing between cloud-based and on-premise Business Intelligence (BI) solutions often hinges on how effectively each handles data integration. Both offer robust capabilities, but their approaches and strengths differ significantly, impacting factors like speed, scalability, and cost. Understanding these differences is crucial for selecting the right BI solution for your specific needs.

Data integration involves consolidating data from disparate sources (databases, spreadsheets, cloud apps, and more) into a unified view for analysis. Cloud-based solutions generally excel at handling diverse data sources due to their inherent flexibility and pre-built connectors, while on-premise solutions require more manual configuration and potentially custom development for seamless integration. However, on-premise systems can offer deeper control over data integration processes for organizations with highly specific requirements or sensitive data.

Cloud-Based BI Data Integration

Cloud-based BI platforms often boast extensive pre-built connectors for a wide range of data sources. This simplifies the integration process, allowing users to connect to various databases (SQL Server, Oracle, MySQL), cloud applications (Salesforce, Google Analytics, Marketo), and file formats (CSV, Excel) with minimal effort. Many cloud platforms also support ETL (Extract, Transform, Load) processes through managed services, automating the data cleaning and transformation steps.

For instance, a marketing team could easily integrate data from their CRM, email marketing platform, and website analytics to gain a holistic view of customer behavior without extensive technical expertise. The scalability of cloud services also means that as the volume of data increases, the integration process can typically adapt without major infrastructure changes.

On-Premise BI Data Integration

On-premise BI solutions require more hands-on involvement in data integration. While they often support various data sources, establishing connections typically demands more technical expertise and custom coding. ETL processes often need to be built and managed in-house, which can be both time-consuming and resource-intensive. For example, integrating data from a legacy system might involve developing custom scripts or connectors, requiring significant IT resources.

While this approach allows for granular control over data transformation and security, it adds complexity and potentially increases the overall cost and implementation time. Scaling the integration process in an on-premise environment often involves significant hardware upgrades and infrastructure adjustments.

Data Integration Scenario: A Hypothetical Example

Imagine a retail company with data spread across several systems: a central SQL Server database for sales transactions, a separate CRM system for customer information, and various spreadsheets containing marketing campaign results. A cloud-based BI solution would likely offer pre-built connectors to seamlessly integrate all these sources. The user could configure these connections through a user-friendly interface, with minimal coding required.

In contrast, an on-premise solution would require more intricate setup, possibly involving custom ETL scripts or middleware to bridge the gap between the disparate systems. This could necessitate the involvement of dedicated IT personnel and potentially lead to a longer implementation timeframe. Furthermore, scaling to accommodate future data growth would be more complex and costly with the on-premise approach, requiring significant upfront investment in additional hardware and software.
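The custom ETL or middleware step described above can be sketched, at its simplest, as a script that pulls from each system and joins on shared keys. Here an in-memory SQLite database stands in for the SQL Server sales database, a dictionary stands in for CRM records, and a CSV string stands in for the marketing spreadsheet; all names and values are hypothetical.

```python
import csv
import io
import sqlite3

# Stand-in for the central SQL Server sales database (SQLite for illustration).
sales_db = sqlite3.connect(":memory:")
sales_db.execute("CREATE TABLE sales (customer_id TEXT, campaign TEXT, amount REAL)")
sales_db.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("C1", "spring", 120.0), ("C2", "spring", 80.0), ("C1", "summer", 50.0)],
)

# Stand-in for customer records pulled from the CRM system.
crm = {"C1": {"segment": "enterprise"}, "C2": {"segment": "smb"}}

# Stand-in for a marketing spreadsheet exported as CSV.
campaign_csv = "campaign,spend\nspring,100\nsummer,40\n"
spend = {row["campaign"]: float(row["spend"])
         for row in csv.DictReader(io.StringIO(campaign_csv))}

# Join the three sources: aggregate sales by campaign and by CRM segment.
by_campaign: dict[str, float] = {}
by_segment: dict[str, float] = {}
for customer_id, campaign, amount in sales_db.execute(
        "SELECT customer_id, campaign, amount FROM sales"):
    # Customers missing from the CRM are grouped as "unknown" rather than dropped.
    segment = crm.get(customer_id, {}).get("segment", "unknown")
    by_campaign[campaign] = by_campaign.get(campaign, 0.0) + amount
    by_segment[segment] = by_segment.get(segment, 0.0) + amount

for campaign, revenue in sorted(by_campaign.items()):
    print(f"{campaign}: revenue {revenue:.2f}, revenue/spend {revenue / spend[campaign]:.2f}")
print(by_segment)
```

Even this small script has to decide how to handle keys that do not match across systems; in a production pipeline those rules, along with connection handling and scheduling, are much of what makes on-premise integration labor-intensive.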